Introduction

When writing load test scripts with k6, a common question is how to verify that the load scenarios themselves work correctly.

There are several ways to test them, such as unit tests and integration tests.

In this article, I’ll introduce a method for testing load scenarios by preparing a mock API server.

The Conclusion First

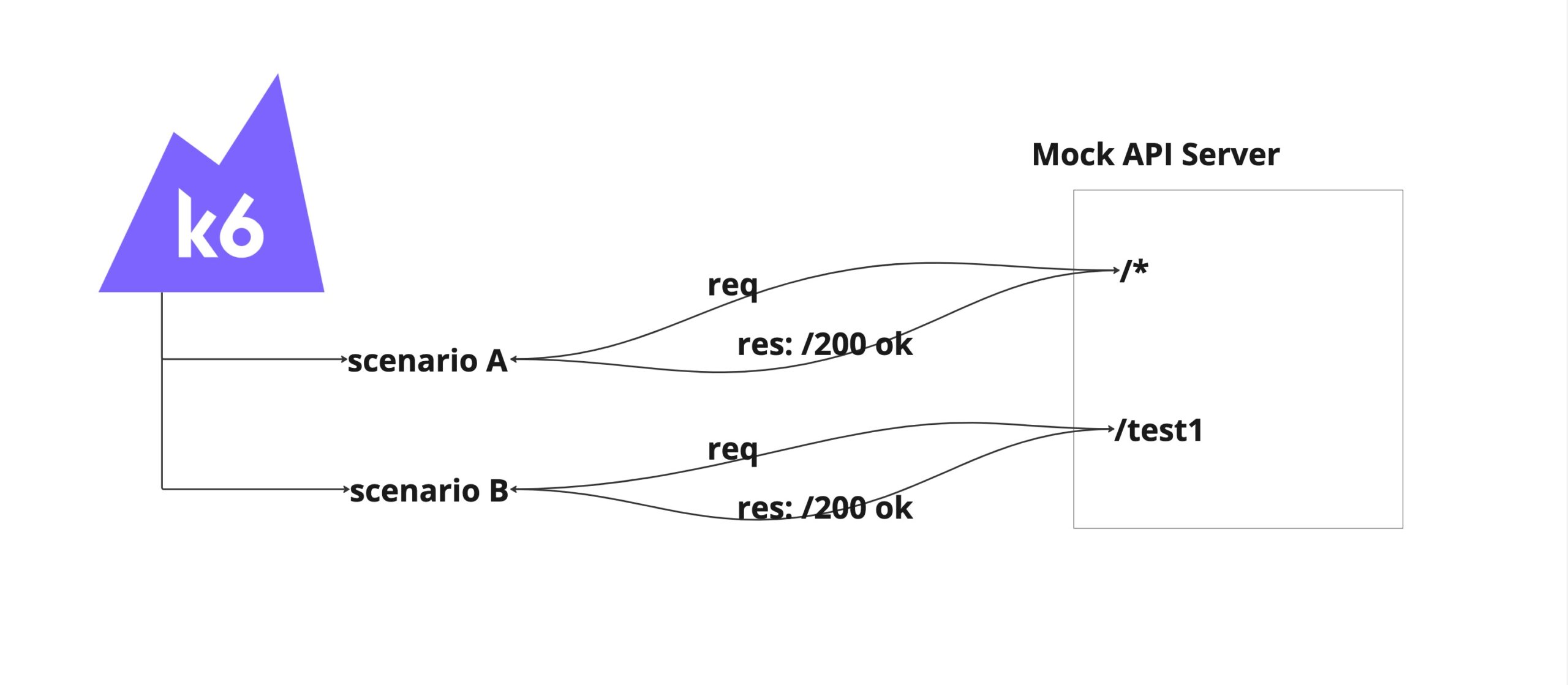

Here’s an image to illustrate the concept:

We’ll set up a Mock API Server using Express.

Each scenario will access the Mock API Server instead of the actual web server.

The Mock API Server will provide an API that returns a 200 OK response for any endpoint that’s called.

If you want to control specific Mock API responses, you can prepare APIs as needed.
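The rule described above, a generic 200 OK for any endpoint plus hand-written responses only where you need them, can be sketched as a plain lookup independent of Express. This is an illustration under assumptions: `MockResponse`, `overrides`, and `resolveMockResponse` are hypothetical names, and the server built later in this article expresses the same idea with Express routes.

```typescript
// Hypothetical sketch of how a catch-all mock decides what to return.
interface MockResponse {
  status: number;
  body: { message: string };
}

// Endpoints you want to control explicitly, keyed by "METHOD path".
const overrides: Record<string, MockResponse> = {
  "GET /sampleProduct/sample": {
    status: 200,
    body: { message: "This is sampleProduct endpoint" },
  },
};

// Any endpoint without an override falls through to a generic 200 OK.
const fallback: MockResponse = { status: 200, body: { message: "OK" } };

function resolveMockResponse(method: string, path: string): MockResponse {
  const key = `${method.toUpperCase()} ${path}`;
  return overrides[key] ?? fallback;
}

console.log(resolveMockResponse("get", "/sampleProduct/sample").body.message); // prints: This is sampleProduct endpoint
console.log(resolveMockResponse("POST", "/sampleProduct/orders").status); // prints: 200
```

Keeping the fallback generic is what makes the mock cheap to maintain: new scenarios work immediately, and you only add an override when a scenario depends on a specific response body.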

Here’s what this setup can and cannot do:

- What it can do:
  - Verify that k6 scenarios run correctly
- What it cannot do:
  - Verify the behavior of individual functions or modules in your k6 code
  - Verify that the real API endpoints return correct responses

In other words, this approach confirms that your scenarios run end to end without errors.

For the complete Mock API Server implementation, see this PR:

https://github.com/gonkunkun/k6-template/pull/2

Let’s Set Up a Mock API Server

Now let’s actually set up the server.

You can refer to this directory structure:

https://github.com/gonkunkun/k6-template/

```
~/D/g/t/mock ❯❯❯ tree ./ -I node_modules
./
├── package-lock.json
├── package.json
├── src
│   ├── index.ts
│   └── sampleProduct
│       └── routes.ts
└── tsconfig.json
```

Setting Up the Mock API Server

Prepare a package.json file like this:

```json
{
  "name": "test",
  "version": "1.0.0",
  "description": "",
  "main": "src/index.ts",
  "author": "",
  "license": "ISC",
  "devDependencies": {
    "@types/express": "^4.17.21",
    "express": "^4.19.2",
    "ts-node": "^10.9.2",
    "typescript": "^5.4.3"
  }
}
```

Next, install the modules:

```
~/D/g/template-of-k6 ❯❯❯ cd mock
~/D/g/t/mock ❯❯❯ npm install
```

Add the server configuration under the src directory.

Create src/index.ts as follows:

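```typescript
import express, { Request, Response } from 'express'
import sampleProductRouter from './sampleProduct/routes'

const app = express()

// Parse JSON request bodies; without this, req.body is always undefined
app.use(express.json())

// Port from the first CLI argument (e.g. `npx ts-node ./src/index 3005`), defaulting to 3003
const port = Number(process.argv[2]) || 3003

// Root endpoint
app.get('/', (req: Request, res: Response) => {
  console.log(req.body)
  res.status(200).json({ message: 'This is mock endpoint' })
})

// Product-specific endpoints live in their own router
app.use('/sampleProduct', sampleProductRouter)

app.listen(port, () => console.log(`Listening on port ${port}`))
```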


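This defines a response for the root path (/) and mounts a separate router for everything under /sampleProduct.

Since multiple products might share this k6 script in the future, let’s separate the endpoints by product and put the sampleProduct routes in src/sampleProduct/routes.ts: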

```typescript
import { Router, Request, Response } from 'express'

const router = Router()

// A dedicated response for GET /sampleProduct/sample
router.get('/sample', (req: Request, res: Response) => {
  console.log(req.body)
  res.status(200).json({ message: 'This is sampleProduct endpoint' })
})

// Catch-all: any other method or path under /sampleProduct returns 200 OK
router.use((req: Request, res: Response) => {
  console.log(req.body)
  res.status(200).json({ message: 'OK' })
})

export default router
```

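Now every request under /sampleProduct gets a response: GET /sampleProduct/sample returns its own message, and any other path falls through to the catch-all handler. Either way, the status is always 200 OK.

If you want finer control over responses, for example for /sample/test, you can add dedicated routes as needed.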
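One way to get finer control without writing a route per case is to let the request itself ask for a status code. The sketch below assumes a hypothetical `?mockStatus=<code>` query-parameter convention that is not part of the template; `parseMockStatus` is written as a plain function so it can be checked on its own.

```typescript
// Hypothetical convention: `?mockStatus=<code>` asks the mock to reply with that HTTP status.
// Anything missing or invalid falls back to 200, so the default stays "always OK".
function parseMockStatus(query: Record<string, unknown>): number {
  const raw = query["mockStatus"];
  // Repeated parameters can arrive as an array; only a single string value is accepted.
  if (typeof raw !== "string") return 200;
  const code = Number(raw);
  // Only final-response codes (200-599); 1xx codes are informational and cannot carry a JSON body.
  return Number.isInteger(code) && code >= 200 && code <= 599 ? code : 200;
}

console.log(parseMockStatus({ mockStatus: "503" })); // prints: 503
console.log(parseMockStatus({ mockStatus: "abc" })); // prints: 200
console.log(parseMockStatus({})); // prints: 200
```

Inside routes.ts, the catch-all handler could then call `res.status(parseMockStatus(req.query)).json({ message: 'OK' })`, so every endpoint keeps returning 200 OK unless a scenario explicitly asks for something else, for example to check how it handles a 503.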

Once the Mock API Server is complete, start the server:

```
~/D/g/t/mock ❯❯❯ npx ts-node ./src/index 3005
Listening on port 3005
```

Let’s try calling the API:

```
~/D/g/template-of-k6 ❯❯❯ curl -XGET localhost:3005/sampleProduct
{"message":"OK"}
~/D/g/template-of-k6 ❯❯❯ curl -XGET localhost:3005/sampleProduct/test
{"message":"OK"}
```

Looks good.

Using the Mock API Server with k6

Now we just need to point k6 at the mock instead of the real server.

In the sample app, environment variables are passed via a .env file, so set the endpoint to the mock, e.g. SAMPLE_PRODUCT_ENDPOINT=http://localhost:3005.

https://github.com/gonkunkun/k6-template/blob/main/.env.sample#L4
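If the variable reaches k6 as an environment variable (for example via `k6 run -e SAMPLE_PRODUCT_ENDPOINT=...`), the script can read it from k6’s global `__ENV` object. How the template actually loads .env may differ; the sketch below only shows resolving a base URL, using a hypothetical `resolveBaseUrl` helper and an assumed fallback to the mock on port 3005.

```typescript
// Assumption: the mock started earlier listens on port 3005.
const DEFAULT_MOCK_ENDPOINT = "http://localhost:3005";

// Hypothetical helper: pick the target base URL from environment variables.
// In a k6 script you would pass k6's global `__ENV`; here it is a parameter so it runs anywhere.
function resolveBaseUrl(env: Record<string, string | undefined>): string {
  const endpoint = env["SAMPLE_PRODUCT_ENDPOINT"]?.trim() || DEFAULT_MOCK_ENDPOINT;
  // Drop trailing slashes so `${base}/sampleProduct/sample` never contains "//".
  return endpoint.replace(/\/+$/, "");
}

console.log(resolveBaseUrl({})); // prints: http://localhost:3005
console.log(resolveBaseUrl({ SAMPLE_PRODUCT_ENDPOINT: "https://staging.example.com/" })); // prints: https://staging.example.com
```

In a k6 script that would be `const baseUrl = resolveBaseUrl(__ENV)`. Falling back to the local mock rather than a real host means a missing variable can never send load to a real server by accident.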

Then just run k6:

```
~/D/g/template-of-k6 ❯❯❯ npm run smoke:sample-product

          /\      |‾‾| /‾‾/   /‾‾/
     /\  /  \     |  |/  /   /  /
    /  \/    \    |     (   /   ‾‾\
   /          \   |  |\  \ |  (‾)  |
  / __________ \  |__| \__\ \_____/ .io

     execution: local
        script: ./dist/loadTest.js
        output: -

     scenarios: (100.00%) 2 scenarios, 2 max VUs, 1m30s max duration (incl. graceful stop):
              * sampleScenario1: 1 iterations for each of 1 VUs (maxDuration: 1m0s, exec: sampleScenario1, gracefulStop: 30s)
              * sampleScenario2: 1 iterations for each of 1 VUs (maxDuration: 1m0s, exec: sampleScenario2, gracefulStop: 30s)

INFO[0003] 0se: == setup() BEGIN =========================================================== source=console
INFO[0003] 0se: Start of test: 2024-03-31 19:11:07 source=console
INFO[0003] 0se: Test environment: local source=console
INFO[0003] 0se: == Check scenario configurations ====================================================== source=console
INFO[0003] 0se: Scenario: sampleScenario1() source=console
INFO[0003] 0se: Scenario: sampleScenario2() source=console
INFO[0003] 0se: == Check scenario configurations FINISHED =============================================== source=console
INFO[0003] 0se: == Initialize Redis ====================================================== source=console
INFO[0003] 0se: == setup() END =========================================================== source=console
INFO[0009] 6se: Scenario sampleScenario1 is initialized. Lens is 10000 source=console
INFO[0009] 6se: Scenario sampleScenario2 is initialized. Lens is 10000 source=console
INFO[0009] 6se: == Initialize Redis FNISHED =============================================== source=console
INFO[0009] 6se: sampleScenario2() start ID: 2, vu iterations: 1, total iterations: 0 source=console
INFO[0009] 6se: sampleScenario1() start ID: 2, vu iterations: 1, total iterations: 0 source=console
INFO[0010] 7se: sampleScenario2() end ID: 2, vu iterations: 1, total iterations: 0 source=console
INFO[0010] 7se: sampleScenario1() end ID: 2, vu iterations: 1, total iterations: 0 source=console
INFO[0010] 0se: == All scenarios FINISHED =========================================================== source=console
INFO[0010] 0se: == Teardown() STARTED =========================================================== source=console
INFO[0010] 0se: == Initialize Redis ====================================================== source=console
INFO[0010] 0se: == Teardown() FINISHED =========================================================== source=console
INFO[0010] 0se: == Initialize Redis FINISHED =============================================== source=console

     █ setup

     █ sampleScenario2

       ✓ Status is 200

     █ sampleScenario1

       ✓ Status is 200

     █ teardown

     checks.........................: 100.00% ✓ 2 ✗ 0
     data_received..................: 152 kB 21 kB/s
     data_sent......................: 939 kB 132 kB/s
     group_duration.................: avg=621.43ms min=621.42ms med=621.43ms max=621.45ms p(90)=621.45ms p(95)=621.45ms
     http_req_blocked...............: avg=395.64ms min=392.1ms med=395.64ms max=399.17ms p(90)=398.46ms p(95)=398.81ms
     http_req_connecting............: avg=175.13ms min=175.12ms med=175.13ms max=175.14ms p(90)=175.14ms p(95)=175.14ms
     http_req_duration..............: avg=213.6ms min=209.97ms med=213.6ms max=217.23ms p(90)=216.5ms p(95)=216.87ms
       { expected_response:true }...: avg=213.6ms min=209.97ms med=213.6ms max=217.23ms p(90)=216.5ms p(95)=216.87ms
     http_req_failed................: 0.00% ✓ 0 ✗ 2
     http_req_receiving.............: avg=390.5µs min=58µs med=390.49µs max=723µs p(90)=656.5µs p(95)=689.75µs
     http_req_sending...............: avg=20.21ms min=15.2ms med=20.21ms max=25.21ms p(90)=24.21ms p(95)=24.71ms
     http_req_tls_handshaking.......: avg=187.4ms min=183.88ms med=187.4ms max=190.93ms p(90)=190.23ms p(95)=190.58ms
     http_req_waiting...............: avg=193ms min=191.96ms med=193ms max=194.04ms p(90)=193.83ms p(95)=193.93ms
     http_reqs......................: 2 0.280001/s
     iteration_duration.............: avg=1.93s min=507.73µs med=625.79ms max=6.49s p(90)=4.73s p(95)=5.61s
     iterations.....................: 2 0.280001/s
     vus............................: 2 min=0 max=2
     vus_max........................: 2 min=2 max=2

running (0m07.1s), 0/2 VUs, 2 complete and 0 interrupted iterations
sampleScenario1 ✓ [======================================] 1 VUs 0m00.6s/1m0s 1/1 iters, 1 per VU
sampleScenario2 ✓ [======================================] 1 VUs 0m00.6s/1m0s 1/1 iters, 1 per VU
```

Now we can test scenarios using the mock API server.

Conveniently, no real server is needed for this.
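The run plan in the k6 output above (two scenarios, each "1 iterations for each of 1 VUs (maxDuration: 1m0s, ...)") is how k6 describes its `per-vu-iterations` executor. A smoke configuration that would produce that plan might look like the sketch below; it is not the template’s actual options object, and `SmokeScenario` and `smokeScenario` are hypothetical local names standing in for k6’s own typings.

```typescript
// Local stand-in type; a real k6 script could use the `Options` type from 'k6/options' (@types/k6).
interface SmokeScenario {
  executor: "per-vu-iterations";
  vus: number;
  iterations: number;
  maxDuration: string;
  exec: string; // name of the exported scenario function to run
}

// One VU running one iteration: enough to prove each scenario runs end to end.
// gracefulStop is left at k6's default of 30s, matching the output above.
function smokeScenario(exec: string): SmokeScenario {
  return { executor: "per-vu-iterations", vus: 1, iterations: 1, maxDuration: "1m", exec };
}

// In a k6 script this object would be exported as `export const options = { ... }`.
const options = {
  scenarios: {
    sampleScenario1: smokeScenario("sampleScenario1"),
    sampleScenario2: smokeScenario("sampleScenario2"),
  },
};

console.log(Object.keys(options.scenarios).length); // prints: 2
```

One VU and one iteration per scenario keeps the run short while still executing every scenario function once, which is all a check against the mock needs.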

Conclusion

In this article, we set up a Mock API Server to verify that our k6 scenarios run correctly.

Once you’ve accomplished this, the next step is to incorporate these tests into CI.

The following article explains how to do that.

Feel free to check it out if you’re interested.

https://gonkunblog.com/k6-create-ci-for-scneario/1937/