While working through "Web Performance Tuning by Experts," I tried to identify and improve bottlenecks in a sample application.

Here’s a brief introduction to the workflow and commands I used.

If you want to learn more about the detailed thought process for bottleneck investigation and performance improvement, I recommend getting the book mentioned above.

Target Audience

- People who want to know the basic workflow for web performance testing

- People looking for useful commands to use during performance testing

What I Did

The basic workflow was as follows:

- Preparation

- Configure log output settings to check execution results

- Execute load testing

- Apply load using a benchmarking tool

- Monitor the system during load testing

- Check execution results to identify bottlenecks

- Perform tuning

Preparation

Complete log output configuration and command installation.

If you’ve followed the environment setup articles below, you should be ready to go.

https://gonkunblog.com/web-performance-handson-1/1268/

https://gonkunblog.com/web-performance-handson-2/1289/

Load Testing Execution

Apply load using a benchmark tool (I used the ab command introduced later).

During load testing, I monitored the system resource usage with commands like top and dstat.

- Is the CPU idle?

- Which part is becoming a bottleneck where requests are piling up?

I was monitoring with these types of questions in mind.

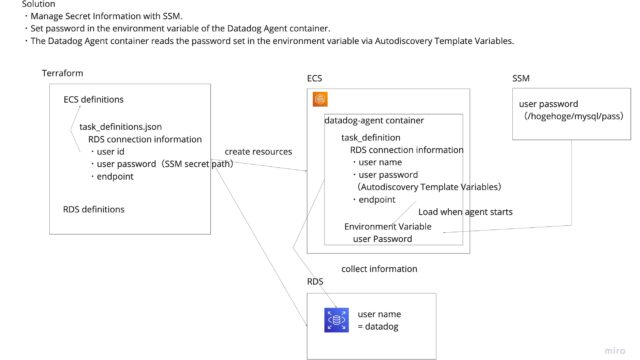

Bottleneck Investigation

After the load test is complete, examine the logs to identify bottlenecks.

For a simple web application configuration, you would typically check the following items first:

- Check the web server access logs

- Check SQL execution results (SlowQuery logs)

- Compare with system resource usage

If performance isn’t improving even though the system isn’t under heavy load (CPU isn’t maxed out), you should suspect bottlenecks somewhere in the process. There are several standard perspectives to consider.

The book introduces several perspectives which I found useful.

Performance Improvement

Here’s what I implemented within the scope of what could be fixed immediately:

- Adding indexes to database tables

- This alone increased throughput about 50 times

- Increasing the number of web server workers

- The CPU was underutilized

- When increasing request parallelism from 1 → 2 → 4, the wait time per request increased linearly

- After confirming the above conditions, I suspected that there were too few web server workers

For this kind of work, you need to accumulate experience and expand your toolkit.

Useful Commands

I’ve compiled command templates that are useful for bottleneck investigation and performance improvement.

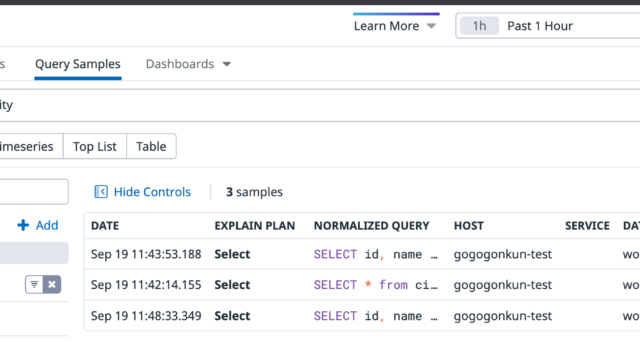

Access Log Analysis – alp command

This can be used to analyze nginx access logs.

GitHub: https://github.com/tkuchiki/alp

Aggregation targets:

timemethoduristatusresponse_timerequest_time

# Simple usage

cat access.log | alp json

+-------+-----+-----+-----+-----+-----+--------+----------------------+-------+-------+-------+-------+-------+-------+-------+--------+-------------+-------------+-------------+-------------+

| COUNT | 1XX | 2XX | 3XX | 4XX | 5XX | METHOD | URI | MIN | MAX | SUM | AVG | P90 | P95 | P99 | STDDEV | MIN(BODY) | MAX(BODY) | SUM(BODY) |AVG(BODY) |

+-------+-----+-----+-----+-----+-----+--------+----------------------+-------+-------+-------+-------+-------+-------+-------+--------+-------------+-------------+-------------+-------------+

| 1 | 0 | 1 | 0 | 0 | 0 | GET | /favicon.ico | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 43.000 | 43.000 | 43.000 | 43.000 |

| 1 | 0 | 1 | 0 | 0 | 0 | GET | /css/style.css | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 1549.000 | 1549.000 | 1549.000 | 1549.000 |

| 1 | 0 | 1 | 0 | 0 | 0 | GET | /img/ajax-loader.gif | 0.004 | 0.004 | 0.004 | 0.004 | 0.004 | 0.004 | 0.004 | 0.000 | 673.000 | 673.000 | 673.000 | 673.000 |

# Add -r for descending order. Sorts by most accessed URIs

cat access.log | alp json -r

# Use --sort=xxx to sort by specified column. Sort by total response time per URI

cat access.log | alp json --sort=sum -r

# Sort by average response time per URI

cat access.log | alp json --sort=avg -r

# Use -o xxx,xxx, ... to narrow down displayed content

cat access.log | alp json -o count,method,uri,min,avg,max

+-------+--------+-----+-------+-------+-------+

| COUNT | METHOD | URI | MIN | AVG | MAX |

+-------+--------+-----+-------+-------+-------+

| 10 | GET | / | 1.360 | 1.365 | 1.372 |

+-------+--------+-----+-------+-------+-------+

# avg, sum, p99 are commonly used

cat access.log | alp json -o count,method,uri,avg,sum,p99 -r

# To aggregate query parts like '/image/1863.jpg'

cat access.log | alp json -o count,method,uri,avg,sum,p99 -r -m "/image/[0-9]+"

+-------+--------+----------------------+-------+-------+-------+

| COUNT | METHOD | URI | AVG | SUM | P99 |

+-------+--------+----------------------+-------+-------+-------+

| 185 | GET | /image/[0-9]+ | 0.002 | 0.416 | 0.024 |

| 8 | GET | / | 0.089 | 0.712 | 0.256 |

# Find requests with 5xx errors

cat access.log | alp json -o count,method,uri,5xx -r

# If the access log is in default format instead of json

cat access.log | alp regexp

Quite convenient. It might be better than manually grepping through access logs during an incident.

Benchmarking Tool – ab command

You can quickly apply load by specifying concurrency and requests.

Installing the benchmarking tool:

sudo apt update

sudo apt install apache2-utils

# Basic usage

# Send 10 requests with concurrency level 1 to localhost

ab -c 1 -n 10 http://localhost/

# Enable KeepAlive

ab -c 1 -n 10 -k http://localhost/

# Send requests for 30 seconds

ab -c 1 -t 30 http://localhost/

# Don't fail if response size varies between requests. Useful for dynamic pages.

ab -c 1 -t 30 -l http://localhost/

# For POST requests, it's a bit more complex

# Use -p to specify a file containing the POST body

ab -c 1 -t 30 -p ./sample.json -T "application/json; charset=utf-8" "http://localhost/hogehoge"

Good for unit-level testing.

Not suitable when complex scenarios are needed.

# Brief explanation of results

$ ab -c 1 -n 10 http://localhost/

This is ApacheBench, Version 2.3 <$Revision: 1879490 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking localhost (be patient).....done

Server Software: nginx/1.18.0

Server Hostname: localhost

Server Port: 80

Document Path: /

Document Length: 35644 bytes

Concurrency Level: 1 # Concurrency level

Time taken for tests: 13.651 seconds # Total elapsed time

Complete requests: 10 # Number of successful requests

Failed requests: 0 # Number of failed requests

Total transferred: 360160 bytes # Total bytes transferred from server

HTML transferred: 356440 bytes

Requests per second: 0.73 [#/sec] (mean) # Number of requests processed per second

Time per request: 1365.054 [ms] (mean) # Elapsed time for processing one request

Time per request: 1365.054 [ms] (mean, across all concurrent requests)

Transfer rate: 25.77 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.0 0 0

Processing: 1360 1365 3.8 1365 1372

Waiting: 1360 1365 3.8 1365 1372

Total: 1360 1365 3.8 1365 1372

Percentage of the requests served within a certain time (ms)

50% 1365

66% 1366

75% 1367

80% 1369

90% 1372

95% 1372

98% 1372

99% 1372

100% 1372 (longest request)

```

Requests per second: 0.73 [#/sec] (mean) # Number of requests processed per second

Time per request: 1365.054 [ms] (mean) # Elapsed time for processing one request

Throughput and processing time are key metrics to look at.

Slow Query Analysis – mysqldumpslow

Used to aggregate SlowQuery logs.

Comes with MySQL installation, so no additional installation is needed.

# Output sorted by total execution time

sudo mysqldumpslow /var/log/mysql/mysql-slow.log

# Output looks like this

Reading mysql slow query log from /var/log/mysql/mysql-slow.log

Count: 1716 Time=0.05s (77s) Lock=0.00s (0s) Rows=3.0 (5148), isuconp[isuconp]@localhost

SELECT * FROM comments WHERE post_id = N ORDER BY created_at DESC LIMIT N

Even if the execution time of each query is short, if a huge number of queries are executed, the proportion of query execution time in the total processing time can be enormous, so be careful.

You can identify bottleneck causes by examining query execution time, Rows (how many rows were accessed to return n rows), etc.

Monitoring CPU, Memory, Processes – top

You can monitor various metrics.

If you have 2 or more CPU cores, be careful as the meaning of the CPU denominator changes between the upper and lower parts. I often find myself thinking "Wait, which one was it?"

The upper part uses all machine CPUs as the denominator.

The lower part is per CPU core. With many cores in the lower part, the total CPU usage can exceed 100%.

System Metrics Display – dstat

You can easily monitor CPU usage with the dstat –cpu command.

GitHub: https://github.com/dstat-real/dstat

$ dstat --cpu

--total-cpu-usage--

usr sys idl wai stl

4 1 95 0 0

0 0 100 0 0

0 0 100 0 0

0 0 99 0 0

0 0 100 0 0

0 0 100 0 0

15 3 81 0 0

48 9 43 0 0

50 8 42 0 0

48 9 43 0 0

49 9 43 0 0

47 9 44 0 0

49 7 44 0 0

idl: Indicates that the CPU is not processing and is idle.

I've used vmstat until now, but this is quite easier to read.

Development has stopped, and dool is being developed as its successor.

GitHub: https://github.com/scottchiefbaker/dool

Conclusion

I’ve briefly introduced the load testing workflow and a collection of useful commands.

I’ve prepared for load testing with scenarios before, but I learned a lot from simply applying load with the ab command.

That’s all.